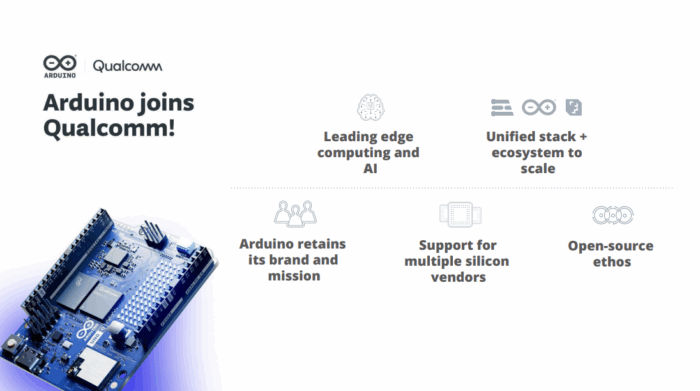

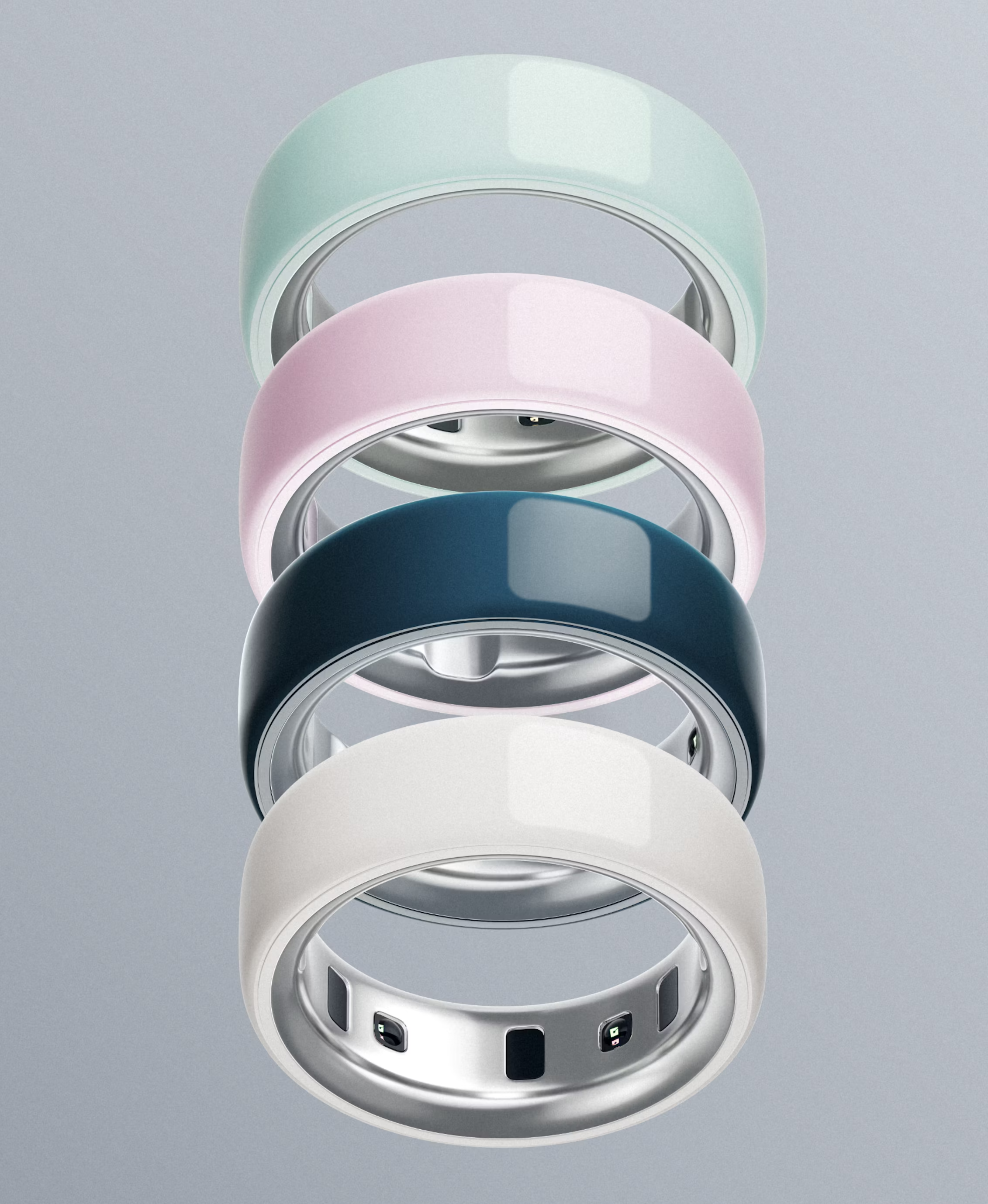

Last year, sparked by a brainwave, I wrote an article on the Oura ring.

Since that time, Oura has continued its amazing growth. And I have continued to ruminate on their role, ambition, and trajectory in the health and wellness space.

Disclaimer: I am not affiliated with Oura, have not really had any conversations with them in the past year (until this past week), and am not privy to anything they haven’t already mentioned in public. So don’t try to read too much between the lines here.

Sticking to their knitting

If you recall my article (and sidebars) from last year, I saw the Oura ring being used in clinical studies, and I saw strong interest from clinicians in applying Oura to clinical applications, for example, patient monitoring before and after surgery. With that in mind, I kept my eyes open for any move in that direction and spoke with many more people about the possibility.

What I most noticed in the past year was that Oura doubled down on wellness, the consumer side of healthcare: primarily women, and also the worried well, those who are keen to keep an eye on their health.

That realization largely evaporated my expectation that Oura would move further into the world I live in: digital therapeutics, digital health in the clinic, devices used together with drugs (PDURS), or clinical trials.

One main reason to doubt that Oura would move into more clinically minded areas is not necessarily the need for deeper validation of measurements and models with the blessing of the FDA. I think a bigger reason is the organizational structure needed to shift from consumer to the business of healthcare: the profit margins are different, the sales channels are different, the marketing is different, the needed sales consulting and solution design (both of which I have done for many years) are very different, and the aftercare and integration services are different.

I have seen so many organizations attempt such shifts. Oura would need to build out that organization, stomach the cost until it reaches a growth period, and justify that patience to the easier money-making parts of the company.

Hold that thought.

Clinical investigation

While I didn’t think Oura would get into clinical investigation or clinical care, I did keep an eye out for where Oura kept popping up.

I have an Oura ring, and the ring comes up in so many of my conversations with hospitals, pharma, doctors, and scientists. At HLTH this year, Oura was mentioned in many talks and conversations. At this year’s CNS Summit (neuroscience and clinical trials), the Oura ring was mentioned many times as well, especially in relation to data capture, therapy, and digital biomarkers and endpoints.

I did get one new piece of info: while talking to a company that makes a solution for evaluating myasthenia gravis, I asked if they had considered using the Oura ring. Indeed, they had, but for what they wanted, they ended up making their own device. The ring, tho a great form factor with great sensors, isn’t really made for the rigors of clinical trials. For example, it seems that when the battery gets low, not all data is collected. And the need to do things thru the app, to set up API access, and to recharge weekly doesn’t fit well with the clinical trials this guy’s company runs.

Hm. Interesting.

Digital endpoints

At the CNS Summit, there were lots of discussions around digital endpoints. Indeed, DEEP Measures, a Finnish company, was there presenting what they do. DEEP Measures helps clients through the whole process, from ideation to creation to deployment, of establishing a digital biomarker that can be used as an endpoint.

I was reminded that this process is not just about getting an OK from the FDA (BTW, AFAIK, there are no approved primary digital endpoints in the US). One also needs to establish a standard way of doing things, so that everyone using the endpoint measures and calculates it the same way.

For example, what do we mean by a sleep score? How is it measured? What is measured? Where is the device placed: wrist, chest, leg?

Turn that back to Oura – are they even set up to do that kind of work?

Hold that thought as well.

Oura as platform

One other thought I had in the past year was: what about folks who want to build something around the ring and its data, say patient monitoring solutions like my Parkinson’s example, or a clinical trial service?

As I saw such requests as falling outside Oura’s wellness focus, I didn’t expect Oura to have the people or organizational structure to serve such partners. Hence, I envisioned solution builders using Oura as a platform without engaging with Oura the company, basically using the great API and off-the-shelf rings on their own.

The corollary is that if Oura wanted to support these folks, they would need to set up a sales engineering team and a customer experience team, much like other hardware and software vendors do. There would also have to be a strong developer relations team to harden the APIs for heavy use, drive roadmaps (for customers, not for internal dev), and educate and enable developers.

Again, this speaks to the need for an organizational structure they might not have at this moment.

Hold that thought.

Now let’s unhold those thoughts

To summarize the above, I expected Oura to stick to their knitting, staying with wellness and consumer digital health rather than building an organizational structure to address clinical research, clinical care, and the related solution developers. I also started to think that the Oura ring, while an amazing piece of kit, might not move beyond nice-to-have use: in clinical research by those in the know who are willing to work around its shortcomings (adding to the almost 100 clinical trials the ring had already been used in), or in clinics run by forward-thinking care providers.

While that held true for 2025, I do not think it will hold for 2026.

There have been some interesting hires in the past year of folks with more medical and clinical care chops. And, even more telling, their press release from the past month, right after a whopping Series E raise, teases so much of where Oura is heading in the clinical care space.

I think, indeed, Oura has been sticking to their knitting, building their consumer juggernaut. But now, they are clearly looking to take the risk and enter the healthcare field.

The press release reaffirms their commitment to wellness, “elevating Oura Ring from a passive tracker to a trusted early-warning system that helps people take proactive steps toward long-term health.”

But they also mention they are pursuing FDA approval for some of their features, starting with new blood pressure features. From the press release:

By integrating continuous Oura Ring data with research-grade measurements, this study aims to uncover how subtle physiological shifts can indicate chronic elevated blood pressure risk. It represents one of ŌURA’s most ambitious preventive health initiatives to date in terms of the scale of data and updates to the algorithm, reinforcing Oura Labs’ mission to bridge the gap between consumer wearables and clinical research.

And from CMO Dr. Ricky Bloomfield:

“By combining rigorous research with continuous, real-world data, we can identify early patterns that often go unnoticed in traditional healthcare settings. The Blood Pressure Profile study and Cumulative Stress feature mark an important step forward in translating science into everyday guidance—helping people recognize how small physiological changes today can influence long-term health outcomes.”

“Rigorous research” is already at the heart of Oura. They are heavily science-based, which is why they are so strong in consumer wellness, where so many players are not. The research side of the company is also well suited to engaging with the FDA, as it already has the needed mindset.

And when they say “continuous, real-world data,” words right out of healthcare and pharma, they are signaling the opportunity they have and what they bring to the table.

And the last words of Ricky’s quote are strong too: “long-term health outcomes.” The digital health world has always had an eye on prevention, continuous monitoring, and bending the arc of long-term outcomes.

Seems like Oura is finally embracing clinical healthcare.

In case you missed the point

Lastly, in the press release, they mention their health report (read the overview and download it here).

They clearly pitch it as an “exploration of how continuous, personalized insights are transforming care delivery and preventive health.” And they are also clear that this report is for “healthcare innovators, payers, researchers, and clinicians.”

It’s very clear they want to show their impact across many health areas and craft a framework of use for these health practitioners and their patients (no doubt with the One Ring at the center).

This report is their stake in the ground (and a justification of their valuation), showing they can go from a successful consumer wearables company to a legitimate healthcare solutions delivery company.

They lay out the foundation they already have, from the huge volume of real-world data, to numerous publications, to experienced staff, to a range of strong strategic partnerships. So while I saw them stick to their knitting thru 2025, they were pulling together the foundation for their next move for 2026.

The key still will be whether they can actually build the organizational structure to deliver on this vision: the sales engineering, the clinical-grade support, the FDA regulatory pathway management. But they’ve certainly laid the intellectual and partnership groundwork to make the attempt credible.

In summary

Clinical care and research are areas I had hoped Oura would get into, a natural adjacency for them. Seems like 2026 is the time for them to make it so.

Tho I still think there’s going to be a huge challenge in building the organizational structure to deliver on their mission of “transforming healthcare delivery with innovative solutions.”

The money they raised will surely make that easier. I’ve also seen that they are quite capable of hiring top talent thanks to their mission, their product, and their growth.

Exciting times ahead for Oura.

I look forward to watching them grow even more in 2026.