I’ve always been straddling the physical and the digital – thinking of how the two worlds interact and complement each other, and what it means for us stuck in the middle. And, in the last few years, thanks to price, ease of use, tools, and communities, I have become more hands-on mixing the physical and digital (and sublime) worlds.

Being in both the digital and the physical has also led me to think about data, analytics, data fluency, sensors, and users (and to help others think and act in these areas, too). ML and AI, predictive analytics and optimization, and the like were all part of this thinking as well. So, with much interest, for the last two or so years I’ve been dabbling with generative AI (no, not just ChatGPT, but much earlier, with DALL-E and Midjourney).

Mixing it

In my usual PBChoc thinking, I started wondering what would be the fusion of the physical and these generative AI tools. And, despite spending so much of my life writing, I could not articulate it. I tend to sense trends and visualize things long before I can articulate them. So I read and listen for those who can help me articulate.

I wrote recently about ‘embodied AI’ – the concept of AI in the physical world. Of course, folks think humanoid robots, but I think smart anything (#BASAAP). Now I see folks using the term ‘physical AI’.

New something?

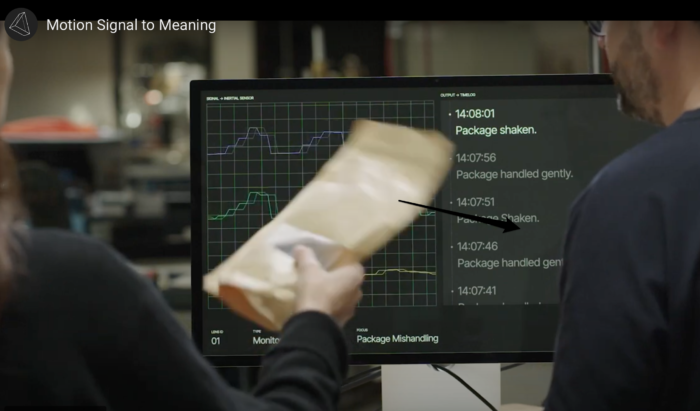

Not sure how I missed these guys, but I stumbled upon Archetype.ai. They are a crack team of ex-Google smarties who have set off to add understanding of the physical world to large transformer models – physical AI.

At Archetype AI, we believe that this understanding could help to solve humanity’s most important problems. That is why we are building a new type of AI: physical AI, the fusion of artificial intelligence with real world sensor data, enabling real time perception, understanding, and reasoning about the physical world. Our vision is to encode the entire physical world, capturing the fundamental structures and hidden patterns of physical behaviors. – from What Is Physical AI? – part 1 on their blog

This is indeed what I was thinking. Alas, so much of what they talk about is the tech side of it: what they are doing, how they are doing it, and their desire to be the platform and not the app maker.

At Archetype, we want to use AI to solve real world problems by empowering organizations to build for their own use cases. We aren’t building verticalized solutions – instead, we want to give engineers, developers, and companies the AI tools and platform they need to create their own solutions in the physical world. – from What is Physical AI? – part 2 on their blog

Fair ‘nough.

And here they do make an attempt to articulate _why_ users would want this and what _users_ would be doing with apps powered by Newton, their physical AI model. But I’m not convinced.

Grumble grumble

OK, these are frakkin’ smart folks. But there is soooo much focus on fusing these transformer models to sensors, and <wave hands> we all will love it.

None of the use cases they list are “humanity’s most important problems”. And the ones they do list, I saw done quite well years ago. I become suspicious when the use cases for a new tech are not actually use cases in need of new tech. Indeed, I become suspicious when the talk is all about the tech and not about the unmet need the tech is solving.

Of course, I don’t really get the Archetype tech. Yet, I am not captivated by their message – as a user. And they are clear that they want to be the platform, the model, and not the app maker.

Again, fair ‘nough.

But at some level, it’s not about the tech. It’s about what folks want to do. And I am not convinced that 1) they are addressing an unmet need in the existing use cases they list, or 2) any of their listed use cases _must_ use their model, the large, revolutionary-change sorta thing.

Articulate.ai

OK, so I need to think more about what they are building. I have spent the bulk of the last few decades articulating the benefits of new tools and products, and inspiring and guiding folks on how to enjoy them. So, excuse me if I have expectations.

I have been well aware these past few decades that we are instrumenting the world: sensors everywhere, data streaming off of everything, and the need for computing systems to be physically aware.

I’m just not sure that Archetype is articulating the real reason why we need their platform to make sense of that world.

Hm.

Watch this space.

Image from Archetype.ai video
